Recipes for Claude-maxxing

the best job in this timeline

The newsletter for the technically curious. Updates, tool reviews, and lay of the land from an exited founder turned investor and forever tinkerer.

Hey folks,

You can now share your Claude Code setup with others using “Claude Code Plugins”. Plugins bundle the slash commands, subagents, MCP servers and hooks you’ve set up into a single JSON config. You can publish a set of plugins as a marketplace and let your teammates, or anyone else, install them.

I think this is supposed to be cool, but I’m not convinced. For a while, people have been sharing their own commands, agents, etc. in GitHub repos for others to copy into their workflows. This just feels like a slightly easier way to bulk-download them. (It also reminds me of the days of people selling Notion templates.)

And because so many CLI tools are built similarly, these can just be ported across to any CLI you use. It didn’t take long for Ian Nuttall to create this, which lets you install them as bundles or individually from marketplaces into any CLI.

What I do think is cool is reading the different docs people create to enhance their coding workflows. If you’re paying attention, the files are often just really clear instructions for an agentic tool to follow. Once again, I find myself setting out to code a tool and ending up with a simple markdown file of explicit instructions for an agent to follow instead. Apps are specs.

Gemini CLI also launched Gemini Extensions, which package custom commands, MCP servers and specific files into a single JSON config (again, see Ian’s tool to port them into any CLI).

OpenAI is aggressively expanding its sources of compute, including deals with Google Cloud, Nvidia, AMD and now chips designed by OpenAI itself in partnership with Broadcom. OpenAI and Broadcom have already been working together for 18 months and will start deploying 10 GW of compute late next year.

Slack’s new platform gives AI agents secure, real-time access to team conversations via RTS (real-time search API) and MCP, enabling context-aware workflows. Launch partners like OpenAI, Anthropic, Google, Perplexity, Dropbox, and Notion have built new Slack-native apps.

Clarify is the autonomous CRM that runs itself. It logs calls, enriches contacts, and updates deals—so you can focus on selling, not systems. Built for fast-moving GTM teams who hate admin work. ⚡ Start for free.*

Ever wonder how this newsletter gets made? Shanice shared what a day in the life at Ben’s Bites looks like.

🌐 What I’m consuming

AI memory explained: SuperMemory MCP for Cursor, Claude & Windsurf.

Why everyone will be talking about Harness Engineering in six months.

Technological Optimism and Appropriate Fear - What do we do if AI progress keeps happening?

The case for Supermemory is the case for solo founders.

How Codex ran OpenAI DevDay 2025.

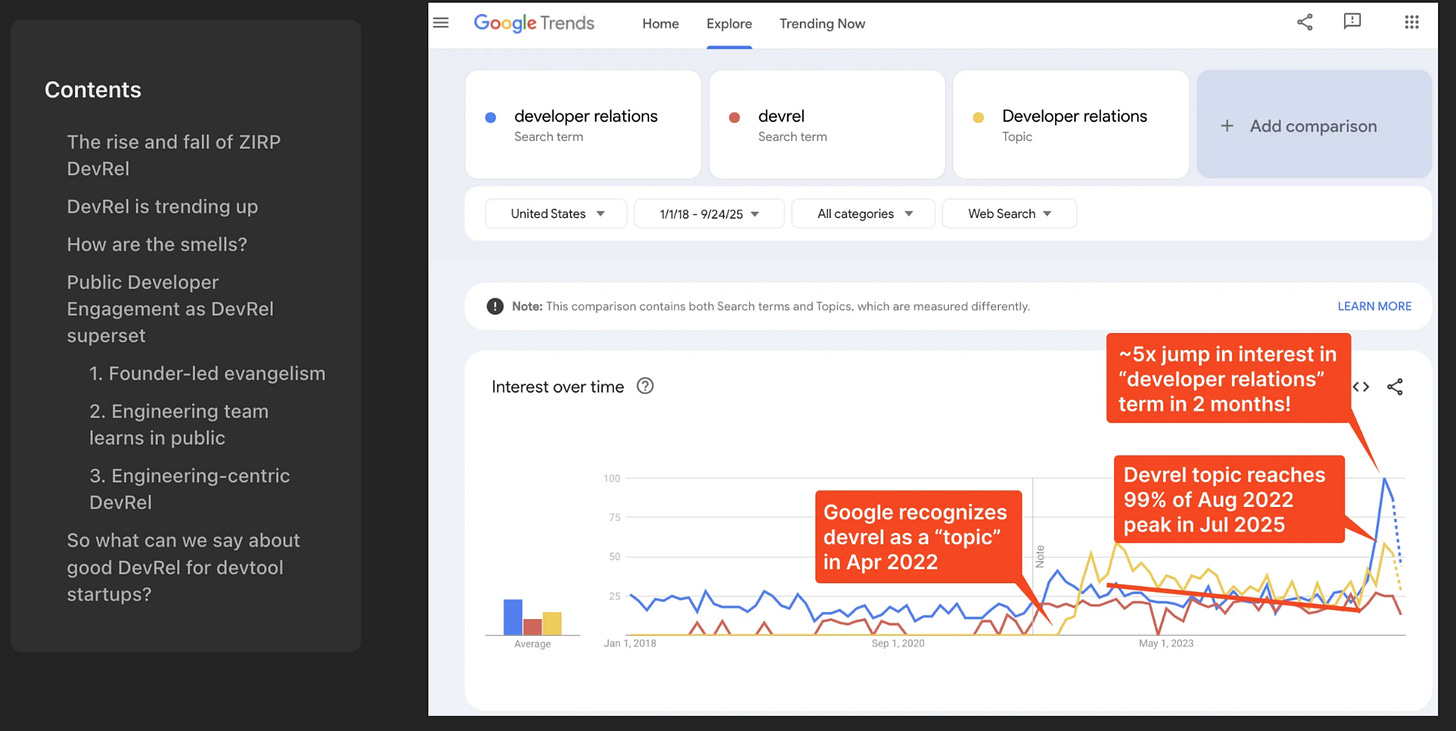

DevRel is unbelievably back. I saved this, then was surprised to see my name mentioned in the post (ty swyx!). I think DevRel is the best job, especially in today’s world. A lot of it is really similar to being a founder: you speak to your customers every day, listen to and implement feedback, understand your product inside and out, know what’s on the roadmap and why certain technical decisions were made, and you’re in charge of growth. I’m going to start talking about the little tools I’m making to help me day to day at Factory, like:

ask-factory - I have the full codebase and our docs on my computer (they get auto-updated from GitHub every hour). So when a user asks a technical question or ‘is this workflow possible?’, I can query this tool and it’ll look up the code and docs, plus Linear issues to see whether something is already logged as a bug or planned as a new feature on the roadmap.

linear-cli - a simple CLI tool that uses my Linear API key to search Linear.

release-notes - generates release notes from our latest releases for our changelog (still in progress!).
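For comparison, here is roughly what a conventional, hand-written linear-cli might look like. This is a minimal sketch, not the author’s tool: it assumes Linear’s GraphQL endpoint at `https://api.linear.app/graphql`, a personal API key sent as-is in the `Authorization` header, a hypothetical `LINEAR_API_KEY` environment variable, and a title-only `containsIgnoreCase` filter. Check the query shape against Linear’s API docs before relying on it.

```python
import json
import os
import sys
import urllib.request

LINEAR_GRAPHQL_URL = "https://api.linear.app/graphql"

# Assumed query shape: issues whose title contains the term, case-insensitively.
SEARCH_QUERY = """
query Search($term: String!, $first: Int!) {
  issues(first: $first, filter: { title: { containsIgnoreCase: $term } }) {
    nodes { identifier title url state { name } }
  }
}
"""


def build_search_request(api_key: str, term: str, first: int = 10) -> urllib.request.Request:
    """Build (but do not send) the GraphQL POST request for a title search."""
    body = json.dumps({"query": SEARCH_QUERY, "variables": {"term": term, "first": first}})
    return urllib.request.Request(
        LINEAR_GRAPHQL_URL,
        data=body.encode("utf-8"),
        headers={
            # Assumption: personal API keys go in the Authorization header without a prefix.
            "Authorization": api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def format_issues(payload: dict) -> list[str]:
    """Turn a GraphQL response payload into one printable line per issue."""
    nodes = payload.get("data", {}).get("issues", {}).get("nodes", [])
    return [
        f"{n['identifier']}  [{n['state']['name']}]  {n['title']}  {n['url']}"
        for n in nodes
    ]


def main() -> None:
    term = " ".join(sys.argv[1:])
    api_key = os.environ.get("LINEAR_API_KEY")  # hypothetical variable name
    if not term or not api_key:
        print("usage: LINEAR_API_KEY=... linear-cli <search term>", file=sys.stderr)
        return
    with urllib.request.urlopen(build_search_request(api_key, term)) as response:
        payload = json.load(response)
    for line in format_issues(payload):
        print(line)


if __name__ == "__main__":
    main()
```

Running something like `linear-cli login timeout` would print one matching issue per line. Splitting request-building from sending keeps the GraphQL details testable without hitting the network.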

These tools aren’t really tools at all… they are instruction documents. For example, ask-factory’s instructions say: look at the Factory codebase repo and docs (linked), run search queries in parallel across both to understand which areas the query relates to, check Linear for anything relevant, then put it all together in an answer format like '[example]'. This works because agentic tools like Droid, Claude Code and Codex follow instructions really well and have their own tools at their disposal. FWIW, I currently get way better results using gpt-5-codex.
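The agent does the searching itself with its own tools, but the “run search queries in parallel across both” step can be sketched in plain Python to show what the instructions are asking for. A minimal sketch, assuming the codebase and docs are two local directories (the `SOURCES` paths below are hypothetical), that a case-insensitive substring match is good enough, and that only a few file types matter; a real agent would use its own grep or search tooling.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Hypothetical local checkouts, kept fresh by a separate hourly sync.
SOURCES = {
    "code": Path("~/src/factory").expanduser(),
    "docs": Path("~/src/factory-docs").expanduser(),
}


def search_tree(root: Path, terms: list[str],
                suffixes: tuple[str, ...] = (".md", ".mdx", ".ts", ".py")) -> list[str]:
    """Return POSIX-style paths (relative to root) of files containing any term."""
    lowered = [t.lower() for t in terms]
    hits: list[str] = []
    if not root.is_dir():
        return hits
    for path in sorted(root.rglob("*")):
        # Skip directories and file types we don't care about.
        if not path.is_file() or path.suffix not in suffixes:
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore").lower()
        except OSError:
            continue
        if any(t in text for t in lowered):
            hits.append(path.relative_to(root).as_posix())
    return hits


def search_all(sources: dict[str, Path], terms: list[str]) -> dict[str, list[str]]:
    """Search every source tree concurrently, one worker thread per tree."""
    with ThreadPoolExecutor(max_workers=len(sources) or 1) as pool:
        futures = {name: pool.submit(search_tree, root, terms) for name, root in sources.items()}
        return {name: f.result() for name, f in futures.items()}
```

Calling `search_all(SOURCES, ["rate limit", "retry"])` returns the matching files per source, which is roughly the map of “which areas the query relates to” that the agent builds before checking Linear and drafting an answer.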

⚙️ Tools and demos

Tasklet - An AI agent for automating your business.

Exa Fast and Deep - Two new search APIs for your AI products.

AI workflow builder by n8n - Build workflows, nodes and their structure from plain-English prompts.

Julius AI now connects to your SQL Server and writes SQL for you to analyse performance, adoption and other KPIs.

NotebookLM’s video overviews can now use images generated by Nano-Banana and come in a shorter format called Brief.

YC unicorn predictor - Are you making something people want? (I think the biggest change to YC’s tagline in the future will be ‘Make something agents want’)

🥣 Dev dish

nanochat - A minimal repo from Karpathy to train an LLM from scratch, take it through post-training with SFT and RL, and chat with it in a ChatGPT-like interface. (discussions on X)

ai-sdk-tools - Essential utilities for building production-ready AI applications.

Beads - A drop-in cognitive upgrade for your coding agent of choice. (read more)

🍦 Afters

Dwarkesh Patel and Stripe Press have a new book, The Scaling Era: An Oral History of AI 2019-2025.

This 350M-parameter model from Liquid AI performs similarly to GPT-5 at extracting PII from Japanese text.

New research: you can just ask an LLM whether a certain demographic would purchase your product and get reliable answers.

That’s it for today. Feel free to comment and share your thoughts. 👋

Read about me and Ben’s Bites

📷 thumbnail creds: @keshavatearth
