open, world models

how i made an archive for ben’s bites

The newsletter for ai builders of all levels. Mini-tutorials, tool reviews, and lay of the land from an exited founder turned investor and forever tinkerer.

Hey folks,

OpenAI finally released two open-weights models: gpt-oss-20b and gpt-oss-120b. These are reasoning models with a mixture-of-experts (MoE) architecture, meaning only a fraction of their parameters are active when you’re chatting with them (most models these days are MoE). They are slightly worse than o3-mini and o4-mini: smart at reasoning, but they don’t know much on their own. In other words, they are built for tool calls and agentic workflows.
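If "only a fraction of the parameters are active" sounds abstract, here is a toy sketch of the general idea behind MoE routing. It is not gpt-oss's actual implementation: the function names, the choice of `k=2`, and the hand-written gate scores are all assumptions for illustration. In a real model the gate is learned and routing happens per token inside each MoE layer.

```python
import math

def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and return (index, weight) pairs.

    gate_scores is a hypothetical list of router scores, one per expert.
    """
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    m = max(gate_scores[i] for i in top)
    exps = [math.exp(gate_scores[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the routed experts and mix their outputs by weight.

    The other experts are never called, which is why only a fraction
    of the parameters do any work for a given input.
    """
    return sum(w * experts[i](x) for i, w in top_k_route(gate_scores, k))

# Four stand-in "experts"; only two of them run for this input.
experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
output = moe_forward(1.0, experts, gate_scores=[0.0, 5.0, 5.0, -1.0])
```

Here experts 1 and 2 tie for the top score, so each gets half the weight and the other two are skipped entirely.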

They are available to download on HuggingFace and other providers, but the easiest way to chat with them is to go to gpt-oss.com. I know everyone is hyped about these models, but I tried running them on a year-old M3 Air (via Ollama) and I am getting ~10 words per minute (o3 is roughly 800/min). If a model doesn’t run on a device like this, it can’t be used to build local-first applications.

That’s not the end of the world, though. I don’t really care about sharing my data with the models. The other thing open models unlock is fine-tuning: companies can train them on a specific task and massively improve the model’s performance on it. So that’s an option if you want to use these models at your company. And oh! These models are text-only.

resources to learn how to use these models

examples of what can be built with open models

a guide on how to fine-tune gpt-oss.

Plus, Matt made oss-pro. It runs the model (hosted on Groq) 10 times on your prompt and picks the best answer, which is what o3 Pro is rumoured to do.
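The "best of 10 runs" idea is usually called best-of-N sampling. Here is a minimal sketch of the pattern. How oss-pro actually picks the winner isn't stated here, so `generate` and `score` are hypothetical stand-ins: in practice `generate` would call the model and `score` might be a judge model or a voting scheme.

```python
def best_of_n(generate, score, prompt, n=10):
    """Sample n answers for a prompt and return the highest-scoring one.

    generate(prompt) -> str and score(prompt, answer) -> float are
    hypothetical callables supplied by the caller.
    """
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda answer: score(prompt, answer))

# Toy usage: canned answers stand in for model runs, length stands in for quality.
canned = iter(["a", "abc", "ab"])
result = best_of_n(lambda p: next(canned), lambda p, a: len(a), "any prompt", n=3)
```

The trade-off is simple: you pay for N runs to get one answer, so it only makes sense when inference is cheap and fast.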

btw, gpt-5 is coming today.

But that’s not all for today. Google previewed Genie 3. If you remember Genie, it’s their model that creates game environments you can interact with. This new model went from glitchy games to realistic, video-like environments where you can make the scene behave as you wish and move within it. The biggest upgrade: it remembers up to 1 minute of past activity in the environment. This breaks my mind. It doesn’t feel possible, and it makes you wonder if we are living in a simulation. It’s another Sora-like moment (and 15 months after Sora, we had AI videos indistinguishable from real ones). Sora was supposed to be “a world model”, but Genie 3 looks more like one.

Besides this, Google added some new features to Gemini:

Guided learning - Just like OpenAI’s study mode, this helps you learn and work your way to answers. It’s more visual than study mode.

Storybooks - Create 10-page storybooks with images and audio. Try it here. tbh, Gemini’s team is leading on finding new formats: audio overviews, deep research, and now storybooks. I turned Tuesday’s newsletter into one.

And Jules, Google’s AI coding agent, is now out of beta. The web apps for both Jules and Codex are buggy as hell, but Jules is still better than Codex in my experience.

And Anthropic released Claude Opus 4.1. It’s a minor bump over Opus 4 but a significant improvement in terminal-based coding (39.2% → 43.3%).

Everyone’s talking co-pilots. BMC Helix built a full crew: Agentic AI gives you a team of autonomous IT agents that don’t just chat, they act. File tickets, resolve issues, close loops, plug into your stack—no rip-and-replace. Just better ops, out of the box. → Meet the agents*

Shopify released three new tools for building shopping experiences with agents. Their article on using MCP to put together shopping-specific UI on demand is worth reading.

*sponsored

🌐 What I’m consuming

Stripe’s analysis of payment data from top 100 AI companies on their platform.

GothamChess’s commentary on the chess tournament between top AI models. I have timestamped it to the match between Opus 4 and 2.5 Pro. It’s so hilarious watching him react to all the “justifications” these models give for their moves.

Learn to use Claude Code - from basics to MCP integrations, Hooks and more.

A cheeky pint with Anthropic’s CEO Dario Amodei

⚙️ Tools I’m looking into

AssemblyAI is the fastest way to build scalable voice-powered apps. Get your API key + 330 free hours.*

Eleven Labs Music - Make the perfect song for any moment. They have an API too.

Lilac - Open-source tool that connects your data scientists to GPUs anywhere—on-prem or cloud.

Endex - Excel-native AI agent for financial modelling and data analysis. (raised $14M)

Maybe - Connect QuickBooks and get to know your business in plain English.

Kombai - AI agent for complex frontend tasks.

Notion is making the entire tool accessible to Notion AI, i.e. Notion AI can edit multiple pages, sync them, fix databases, etc.

*sponsored

🥣 Dev dish

AutoDoc by Cosine - Automatically generates and continuously updates documentation for your projects.

New in Claude Code: clear old tool calls to increase session length, PDF support and automatic security reviews.

MCP.RL by OpenPipe - Teach your model how to use any MCP server automatically using reinforcement learning!

Eval Protocol - OSS library and SDK for making model evaluations work like unit tests.

Vercel MCP server - read-only permissions for your projects, deployment logs and Vercel docs.

Groq Code CLI - A lightweight CLI tool that you can customise to build your own.

🛠️ how i made an archive for ben’s bites

by Keshav Jindal

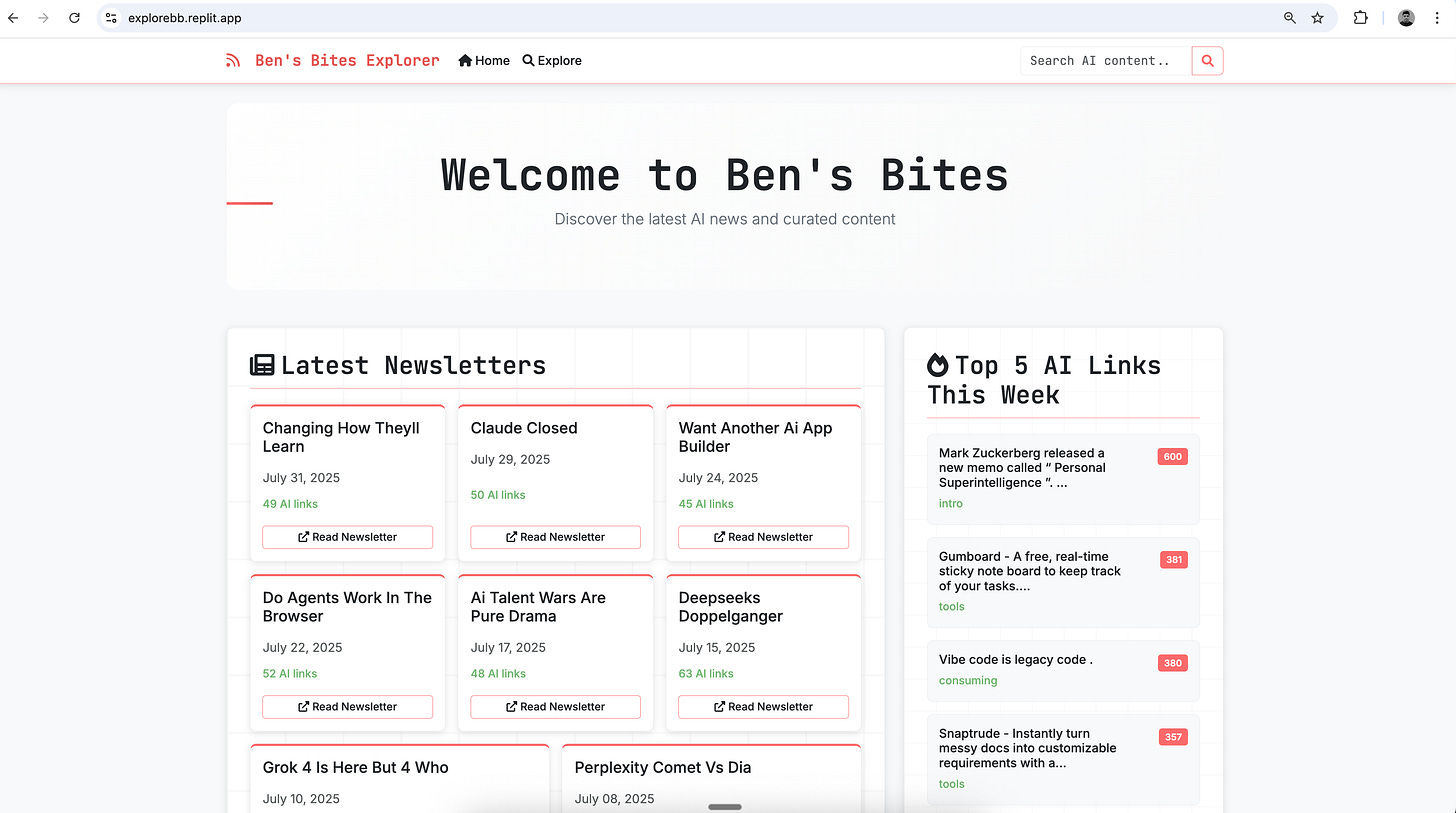

A couple of weeks ago, we put out a website. It’s a simple-looking thing: a search bar, a list of links, some filters. It lets you explore everything we’ve ever shared in the Ben’s Bites newsletter and see how many times each link was clicked.

This website is far from perfect. It has UI inconsistencies that still irk me, some messy data, and it doesn’t update automatically; I have to manually run a script and copy a file over every few days. By any professional software engineering standard, it’s a bit of a hack.

Yet it’s a very good example: a tangible, working artifact that shows what you can do with AI-assisted coding. This writeup is a breakdown of building that website and my thoughts on how to approach building things in this new era.

We'll cover the entire journey, from idea to a deployed web application:

Finding the Private API: How we used standard browser tools to find the data we needed, even though Substack doesn't offer a public way to get it.

Automating an Authenticated Browser: The non-trivial process of getting a script to act like a logged-in user, including the technical hurdles and how we solved them.

AI-Assisted Scripting: The iterative back-and-forth with Claude Code to write, debug, and refine the Python scripts that gather and process our data.

Designing and Building the Frontend: How we used one AI (Gemini) to scope the product and another (the Replit agent) to build the entire web interface.

The Reality of the Workflow: An honest look at the manual steps still required to keep the archive updated.

So, let’s set the stage.

If you don’t know us, Ben’s Bites is a twice-a-week newsletter that compiles what’s hot in the AI world and our take on it. We use “Substack” to write the newsletter. As we’ve published hundreds of issues, we've accumulated thousands of links. A recurring request from our readers has been for a searchable archive—a single place where they could find every tool, article, or paper we've ever mentioned.

Substack, unfortunately, doesn’t have a public API (i.e. a way to pull data about your publication by making a request to Substack’s servers), but I can see that data in my dashboard when logged in on my local browser.

So, how do we get from data locked behind a login to something public that readers can use?

🍦 Afters

OpenAI is giving federal agencies access to ChatGPT Enterprise for $1/agency for the next year.

Applied AI Hackathon with $100k in prizes: hack for 12 hours this Sunday in SF.

That’s it for today. Feel free to comment and share your thoughts. 👋

📷 thumbnail creds: @keshavatearth

Fibery implemented an "AI Build Mode" very similar to Notion's months ago. They actually do a lot of cool stuff with AI in a PKM/database context before Notion does. And I don't think I've seen them mentioned in Ben's Bites. They're small but their work is great, worth paying attention to IMO:

https://community.fibery.io/t/june-19-2025-gantt-view-fibery-ai-build-mode-import-data-even-if-you-are-not-a-creator-and-more/9012#p-33919-fibery-ai-build-mode-experimental-2