Liquid Glass blurs Apple Intelligence

will AI slop win social media and can LLMs actually reason?

I write a newsletter about startups and investing—for ai builders of all levels.

I record mini-tutorials, review tools I’m testing, and share my insights as an exited founder turned investor.

Hey folks,

we were all looking to apple to see if they cooked or if Tim is cooked. after WWDC, i still do not know. until we’re actually using all of it, it feels easy to criticise, but rightfully so, because they need to actually ship what they promise. ahem, swift assist wya?

i use spotlight a fair bit (mostly for calculations tbh 😅) and am always baffled at how awfully bad it is. let's see if the new ‘ai central command’ is better. a lot of people use raycast for similar things (i’ve tried it but it never stuck, though i’m always on the verge of trying again); i suspect i’ll review both when i get my hands on apple’s.

visual intelligence is also interesting but until i’ve played around, who knows how useful it is (side note: has anyone purposefully opened the apple writing ai thing?!)

BUT it all comes down to whether the local models are good enough. and that’s where developers come in: these models will be available via the “Foundation Models” framework.

i invested in mirai (blazing-fast on-device models) because this stuff is a really big deal.

so if you’re building around the apple ecosystem (or anything in infra/devtools) i’d love to chat and potentially invest. ping me. if you’re interested in investing in my next fund to back these builders, get in touch here. ❤️

🔎 News worth knowing

Google gave Gemini 2.5 Pro another capability bump. It’s still in preview, but this version is the one that will become stable. It’s crushing coding benchmarks (better than o3) and has the fun creativity the original 2.5 Pro had. But I miss the natural-reading thinking traces in the Gemini app; now it shows those weirdly summarised thoughts, like o3 does.

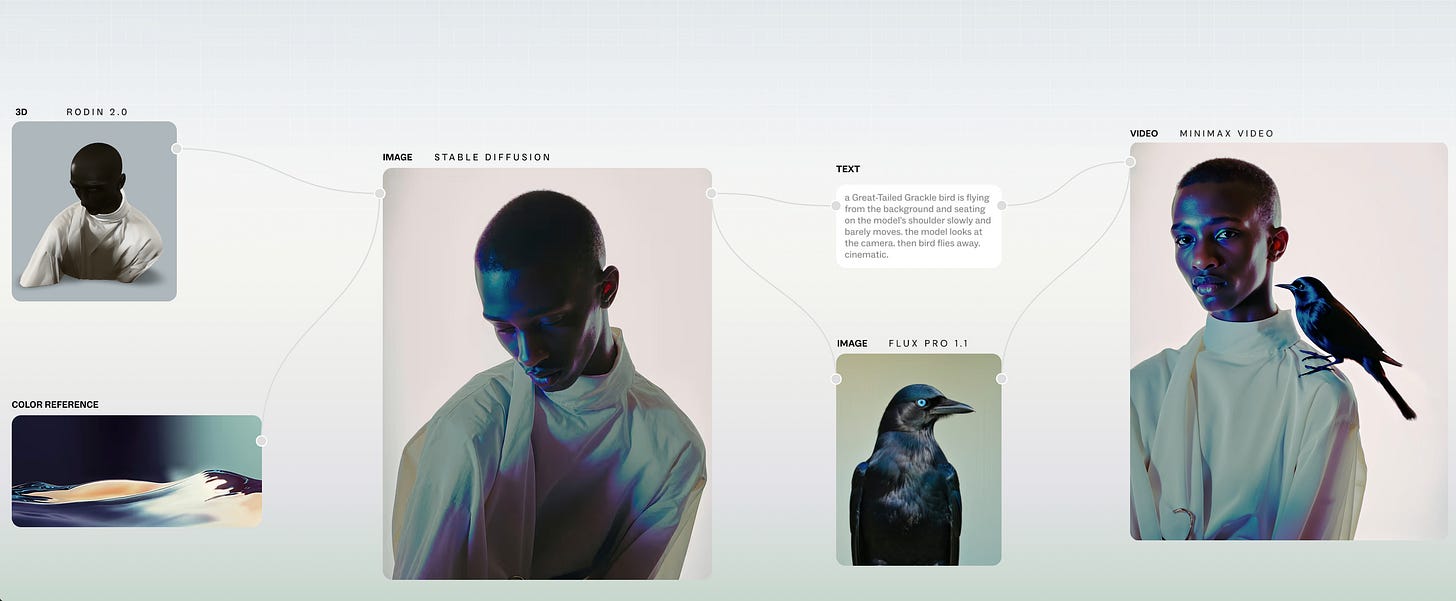

Everyone was so worried about AI slop, but AI videos are ripping on social media. I may write something longer on it, but the tools behind this AI content engine are getting an upgrade:

Veo 3 has a new fast mode. Gemini Pro users now get 3 Veo 3 Fast videos every day.

ElevenLabs launched their V3 model with multi-speaker dialogue, audio tags, and 70+ languages for speech generation. (try here)

Higgsfield and Suno are partnering to make AI music videos with full-range motion.

OpenAI has been quietly shipping upgrades all across their toolkit, including a better Advanced Voice Mode (example), tool support in their evals, and rumours of an upcoming 80% price reduction for o3, all while bringing in more than $10B a year in revenue.

AssemblyAI released a new real-time speech-to-text model. Universal-Streaming is purpose-built for voice agents. The speech-to-text API delivers 300ms latency with fast, immutable transcripts, superior accuracy, and intelligent endpointing. Try it for free today.*

Projects in Claude can now accept 10x more content. Still not able to edit the docs you upload to the knowledge base though… :(

*sponsored

want to partner with us? Click here

🌐 What I’m consuming

This report claims that asking reasoning models to “think step by step” only increases costs, not performance. But I think there’s merit in prompting these models with a detailed, custom reasoning policy rather than a bare CoT prompt.
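To make that distinction concrete, here’s a rough sketch of what a “custom reasoning policy” prompt might look like next to a bare CoT prompt. The function names and the policy steps are my own hypothetical examples (not from the report, and not a tested recipe); the code only builds the prompt strings, which you’d then send to whichever model API you use.

```python
# Hypothetical sketch: a bare chain-of-thought prompt vs. a prompt that spells
# out an explicit reasoning policy. Policy steps are illustrative assumptions.

SIMPLE_COT = "Think step by step."


def build_simple_prompt(task: str) -> str:
    """Return the task followed by the generic CoT instruction."""
    return f"Task: {task}\n\n{SIMPLE_COT}"


def build_policy_prompt(task: str, policy: list[str]) -> str:
    """Return a prompt asking the model to follow a numbered, task-specific policy."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(policy, start=1))
    return (
        f"Task: {task}\n\n"
        "Before answering, follow this reasoning policy:\n"
        f"{steps}\n\n"
        "Then give your final answer on a line starting with 'Answer:'."
    )


policy = [
    "Restate the problem and list any constraints.",
    "Propose two candidate approaches and pick one, saying why.",
    "Check the result against every constraint before answering.",
]

print(build_simple_prompt("Plan a 3-day budget trip to Lisbon."))
print()
print(build_policy_prompt("Plan a 3-day budget trip to Lisbon.", policy))
```

The idea is that the policy version tells the model *what* to reason about for this kind of task, instead of just asking it to reason more.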

Jensen says the UK lacks digital infrastructure as Keir Starmer pledges £1bn for AI. The UK Gov is also partnering with Google, using Gemini to build a tool called Extract that turns old planning documents like blurry maps and handwritten notes into clear, digital data.

Just before WWDC, researchers from Apple released this paper claiming that reasoning models don’t actually reason. But it turns out the models were failing a lot partly because they weren’t thinking for long enough. I know nothing about research, but we know these models perform better. Why not just use them to build a better Siri (which, again, was missing from WWDC)?

Here’s how I’m teaching my kids to use AI. This came from one of our members, and it’s something I really want to think more about. I’m pro-AI for everything and want them to be AI native… but they’re only turning 2 (on Thursday!!!)

Reverse engineering Cursor's LLM client.

Seed rounds of all the AI unicorns founded post-transformer.

Authors are now asking: How do I let AI train on my books?

A no-hype vibe coding tutorial in 30 mins (BB members got this tutorial in March iykyk)

⚙️ Tools I’m tinkering with

Vibe Meter shows your AI spend right on your macOS menu bar, updated in real time, so you know when to pump the brakes (or should you?)

Huxe - Your personal audio companion, built by the team behind the OG NotebookLM experience.

Spreadsheet Agents by LlamaIndex - Data transformation and QA over messy Excel sheets.

Loop by Braintrust - The AI agent for automatic prompt, dataset, and scorer optimisation.

Granola now allows you to upload files as extra context to your meetings.

Boardy AI - This AI tool intros you to others in its network.

Agent Flow - An open-source alternative to Gumloop.

Cart AI - Stay on budget while you shop.

Cosmic - Build and deploy Next.js apps with database, payments and more.

Exa research - Agentic search that doesn't stop until it finds what you need.

Weavy AI - Create scalable workflows for professional creative work.

🥣 mcp memo

MCP vs API - Quick read on the differences between these two.
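If it helps, here’s the gist of the difference in code. With a regular API you call a service-specific endpoint (e.g. Slack’s `conversations.history`); with MCP, every server speaks the same JSON-RPC 2.0 envelope, so a client can discover tools with `tools/list` and invoke any of them with `tools/call`. This minimal sketch only builds the messages: it skips the `initialize` handshake and any transport, and the tool name and arguments are hypothetical placeholders, not what slack-mcp or any other server actually exposes.

```python
import json
from typing import Any, Optional


def jsonrpc_request(request_id: int, method: str,
                    params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP messages use."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# 1. Ask the server which tools it exposes (same method for every MCP server).
list_tools = jsonrpc_request(1, "tools/list")

# 2. Call one tool by name. "get_channel_history" and its arguments are
#    hypothetical placeholders for illustration only.
call_tool = jsonrpc_request(
    2,
    "tools/call",
    {"name": "get_channel_history", "arguments": {"channel": "general", "limit": 20}},
)

print(list_tools)
print(call_tool)
```

The point is that the model-facing side stays identical across servers; only the tool names and arguments change, which is why one client can plug into slack, memory, browser servers and so on without custom glue for each.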

Some new MCP servers that are on my radar:

container use - run multiple coding agents in separate environments

ask-human mcp - let your AI ask you questions while it keeps working

claude composer - small enhancements to claude code

slack-mcp - this one is fairly simple but powerful: list all channels and fetch the conversation history of a single channel

inked - memory management for Claude apps

supermemory - makes your memories available to any LLM

Not an MCP server, but this open-source SWE agent looks interesting.

How I gave Claude access to my browser with MCP. This one is interesting because it uses the browser you’re already logged into. Google’s Project Mariner is supposed to be similar. OpenAI’s Operator, on the other hand, runs a new browser instance. Also, Vercel is making its Bot Protection kit available to all users for free, to block automated traffic even when it’s not malicious (which this MCP server, running in your real browser, would be able to bypass).

🍦 Afters

Anthropic has built special models for the feds. That explains why our tokens are being rationed.

This research paper speeds up AI model inference, especially when long chunks of input or output are reused.

RL-tuning Mistral 24B on molecular chemistry lets it beat other frontier reasoning models at chemistry tasks (blog).

That’s it for today. Feel free to hit reply and share your thoughts. 👋

Enjoy this newsletter? Please forward to a friend.