How a global company lets its employees build with 30+ LLMs

A telecom giant’s not-so-secret AI playground that turned 70,000 employees into copilot builders.

TELUS is one of Canada’s largest telecom companies. With more than 100,000 employees globally, it’s the very definition of an enterprise.

When it comes to AI, many enterprises seem to take the same cookie-cutter approach: deploy GPT-5, add some guardrails, and call it a day.

Not TELUS. Despite their size and all the complexities that come with enterprise-level ops, this global company is thinking about AI in a totally different way. And I want to share what they’re doing with you – I think there’s a lot to take away from their story.

What TELUS is doing with AI, in a nutshell

To overcome the hurdles they faced when trying to integrate AI across the whole company, TELUS built its own switchboard-style application that gives employees access to dozens of LLMs side by side.

It’s a comprehensive AI application built on these core functions:

Create customisable AI copilots for a wide range of problems

Ground your AI copilots in your organisation’s own knowledge

Manage and monitor it all from a centralised control plane

Integrate with external tools to extend knowledge base capabilities and enable agentic AI workflows

And it’s not limited to basic productivity tasks – users can tackle virtually any business challenge by selecting models, defining custom instructions, connecting to knowledge bases, and sharing specialised copilots across the organisation.

They’ve given it a name – Fuel iX Copilots – and it’s been such a success that they’ve turned it into a product for other companies.

How it works

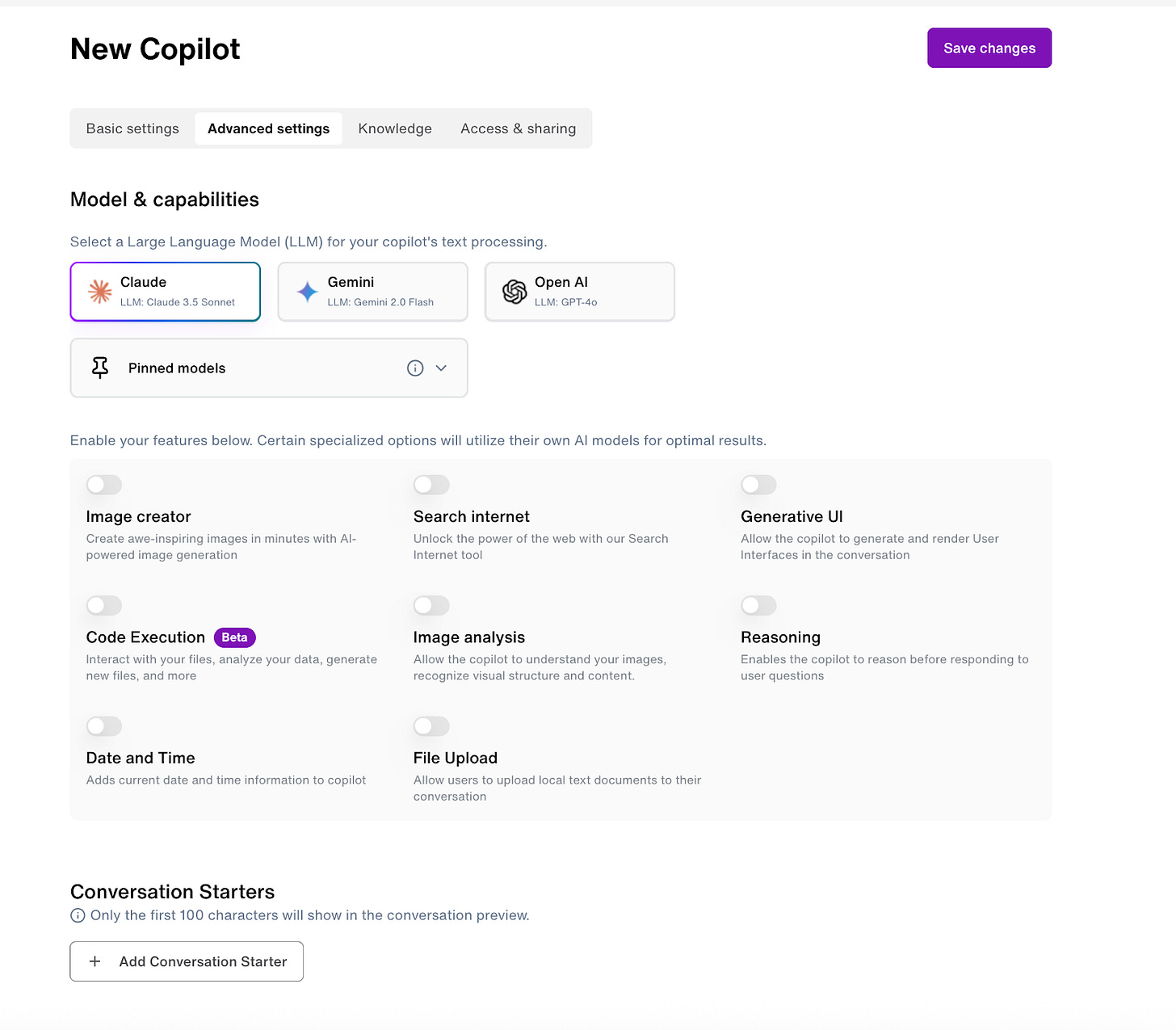

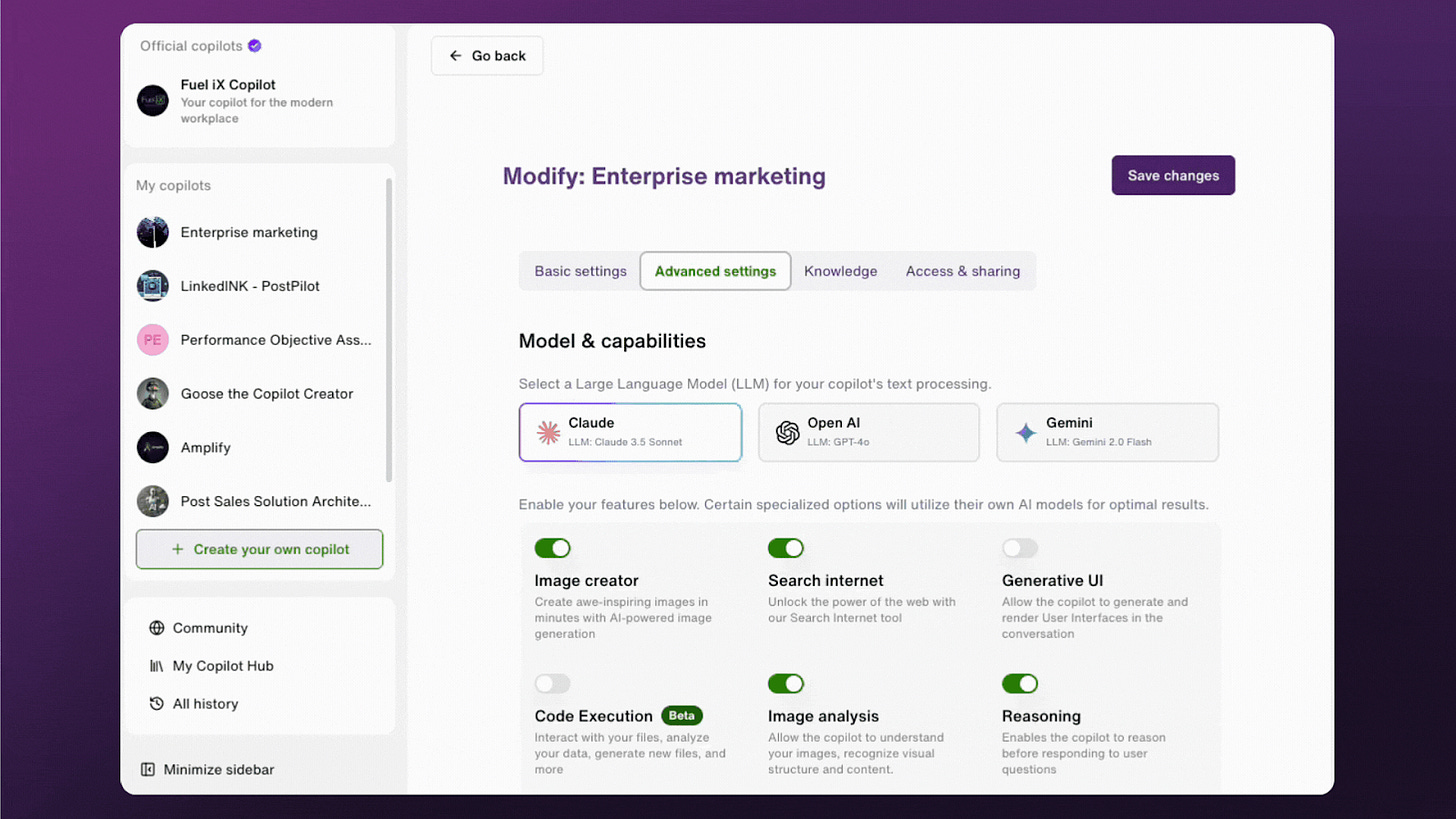

Users can pick from the newest models from Anthropic, OpenAI, and Google. The picker groups models by provider and is maintained centrally, so TELUS can move teams onto each new model release without touching their pipelines.
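To make the "always the newest model, no pipeline changes" idea concrete, here is a minimal Python sketch of a central model registry. Everything in it is an assumption for illustration: `ModelRegistry`, `ModelEntry`, the `provider:latest` alias convention and the model IDs are hypothetical, not Fuel iX's actual API. What it shows is that if callers ask for an alias rather than a pinned model, registering a newer release changes what the alias resolves to without any change on the caller's side.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModelEntry:
    provider: str   # e.g. "anthropic", "openai", "google"
    model_id: str   # hypothetical identifier, not a real model name
    released: date


class ModelRegistry:
    """Hypothetical central registry: the platform team registers each model once."""

    def __init__(self) -> None:
        self._by_provider: dict[str, list[ModelEntry]] = defaultdict(list)

    def register(self, entry: ModelEntry) -> None:
        self._by_provider[entry.provider].append(entry)

    def grouped(self) -> dict[str, list[str]]:
        # What a picker UI could render: provider -> model IDs, newest first.
        return {
            provider: [e.model_id for e in sorted(entries, key=lambda e: e.released, reverse=True)]
            for provider, entries in sorted(self._by_provider.items())
        }

    def resolve(self, alias: str) -> str:
        # "anthropic:latest" -> newest registered Anthropic model;
        # "anthropic:<model_id>" -> that exact (pinned) model.
        provider, _, version = alias.partition(":")
        entries = self._by_provider.get(provider)
        if not entries:
            raise KeyError(f"unknown provider: {provider}")
        if version == "latest":
            return max(entries, key=lambda e: e.released).model_id
        for e in entries:
            if e.model_id == version:
                return e.model_id
        raise KeyError(f"unknown model: {alias}")


registry = ModelRegistry()
registry.register(ModelEntry("anthropic", "anthropic-model-1", date(2024, 3, 1)))
registry.register(ModelEntry("openai", "openai-model-1", date(2024, 8, 1)))
print(registry.resolve("anthropic:latest"))  # anthropic-model-1

# A newer release is registered centrally; callers using the alias pick it up.
registry.register(ModelEntry("anthropic", "anthropic-model-2", date(2025, 5, 1)))
print(registry.resolve("anthropic:latest"))  # anthropic-model-2
```

Teams that need reproducible behaviour can still pin an exact ID (for example `anthropic:anthropic-model-1`) instead of using the alias.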

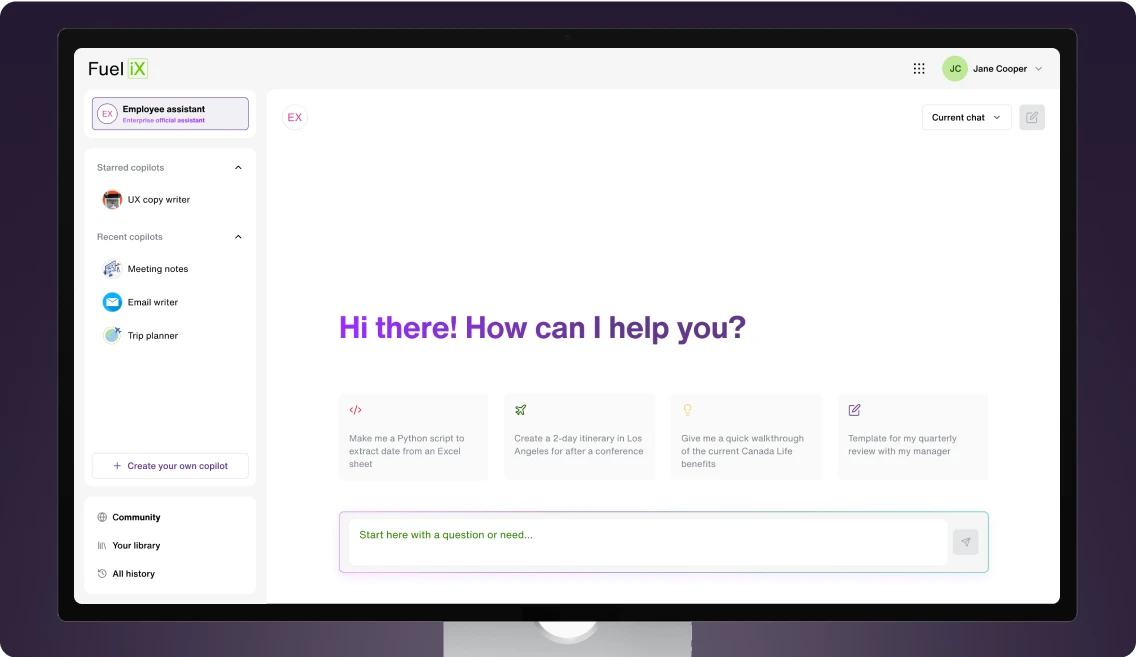

On the front end, Fuel iX Copilots is a chat assistant that can be deployed anywhere in an organisation.

Its primary features include:

Drag-and-drop RAG builder – This lets teams upload docs and databases to create an instant private knowledge base.

Model marketplace – Users can switch between 30+ LLMs with a single toggle.

Unified security layer – SSO, audit logs, and data loss prevention (DLP). Prompts are stateless: a session layer keeps conversation history and document context inside TELUS, while the model retains nothing after it replies. Because the same session token works across Claude, GPT-5, and Gemini, compliance is reviewed once rather than for every new model.

Central analytics & billing – For tracking usage, cost, and ROI per copilot, per model, or per department.
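The stateless-prompt design in the security-layer item above can be sketched in a few lines of Python. This is a hypothetical illustration, not TELUS's implementation: `SessionLayer`, the token format and the stand-in models are assumptions, and real provider calls, audit logging and DLP checks are left out. It shows the core idea: the conversation lives in a store the organisation controls, each model call receives the full context and keeps nothing, and one session token can be used with different models mid-conversation.

```python
import secrets
from typing import Callable

# Any stateless function works as a "model": full message list in, reply out.
# Real provider SDK calls would sit behind this signature (assumption).
Model = Callable[[list[dict[str, str]]], str]


class SessionLayer:
    """Hypothetical in-house session store: conversation state never lives in the model."""

    def __init__(self, models: dict[str, Model]) -> None:
        self._models = models
        self._history: dict[str, list[dict[str, str]]] = {}

    def open_session(self) -> str:
        token = secrets.token_urlsafe(16)
        self._history[token] = []
        return token

    def send(self, token: str, model_name: str, text: str) -> str:
        history = self._history[token]  # raises KeyError for an unknown token
        history.append({"role": "user", "content": text})
        # The model gets a copy of the full context on every call and keeps nothing.
        reply = self._models[model_name](list(history))
        history.append({"role": "assistant", "content": reply})
        return reply

    def transcript(self, token: str) -> list[dict[str, str]]:
        return list(self._history[token])


def fake_model(label: str) -> Model:
    # Stand-in for a provider call: reports how many messages it was sent.
    return lambda messages: f"{label} saw {len(messages)} message(s)"


layer = SessionLayer({"model-a": fake_model("A"), "model-b": fake_model("B")})
token = layer.open_session()
print(layer.send(token, "model-a", "Summarise our travel policy"))     # A saw 1 message(s)
print(layer.send(token, "model-b", "Now translate that into French"))  # B saw 3 message(s)
```

Switching from `model-a` to `model-b` mid-conversation works because the stored history, not the model, carries the context.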

Why they built their own thing

Enterprise AI implementation is rarely straightforward. Between data governance and the sprawl of operations, many companies end up with fragmented deployments, lacklustre adoption, or clunky tools that solve for legal teams but frustrate everyone else.

TELUS faced all of that, but instead of pushing ahead with off-the-shelf copilots or waiting for the dust to settle in the LLM space, they created their own solution.

The Copilots application now gives users access to over thirty LLMs in parallel, so they can:

Upload internal docs and spin up custom copilots quickly

Share those copilots with their teams, or keep them private

Choose which models to use and apply specific instructions

Monitor usage, permissions and compliance in one place
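Those capabilities map naturally onto a small data model. The sketch below is hypothetical (the `Copilot`, `User` and `Visibility` names and the three sharing levels are assumptions, not Fuel iX's schema). It shows how a copilot could bundle a model choice, custom instructions and knowledge-base documents, and how a private/team/organisation visibility setting might decide who is allowed to use it.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    PRIVATE = "private"  # owner only
    TEAM = "team"        # anyone on the owner's team
    ORG = "org"          # anyone in the organisation


@dataclass(frozen=True)
class User:
    name: str
    team: str


@dataclass
class Copilot:
    name: str
    owner: User
    model: str         # a model choice, e.g. "anthropic:latest" (hypothetical alias format)
    instructions: str  # custom instructions applied to every conversation
    documents: list[str] = field(default_factory=list)  # knowledge-base document IDs
    visibility: Visibility = Visibility.PRIVATE

    def can_use(self, user: User) -> bool:
        if user == self.owner:
            return True
        if self.visibility is Visibility.TEAM:
            return user.team == self.owner.team
        return self.visibility is Visibility.ORG


alice = User("alice", "network-ops")
bob = User("bob", "network-ops")
carol = User("carol", "retail")

triage = Copilot(
    name="Outage triage",
    owner=alice,
    model="anthropic:latest",
    instructions="Answer only from the attached runbooks.",
    documents=["runbook-042"],
    visibility=Visibility.TEAM,
)
print(triage.can_use(bob), triage.can_use(carol))  # True False
```

Defaulting `visibility` to `PRIVATE` means nothing is shared until its owner chooses to share it.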

Anyone in the organisation can build AI tools that fit their specific use cases and share them with colleagues, without waiting for central IT or budget approvals. Translation, research, IT queries, policy lookups, and onboarding help are just some examples.

It’s also fully governed by a central control plane, meaning TELUS gets visibility into how teams are using AI, while still enabling decentralised innovation.
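As a rough illustration of the visibility a central control plane provides, the sketch below totals metered cost per department, per copilot or per model from a log of usage events. The `UsageEvent` fields and the `cost_by` helper are hypothetical; a real platform would read from its own metering and audit pipeline rather than an in-memory list.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    department: str
    copilot: str
    model: str
    cost_usd: float  # metered cost of one request (assumed field)


def cost_by(events: list[UsageEvent], dimension: str) -> dict[str, float]:
    """Total cost grouped by one dimension: "department", "copilot" or "model"."""
    if dimension not in ("department", "copilot", "model"):
        raise ValueError(f"unsupported dimension: {dimension}")
    totals: dict[str, float] = defaultdict(float)
    for event in events:
        totals[getattr(event, dimension)] += event.cost_usd
    return dict(totals)


events = [
    UsageEvent("retail", "store-assistant", "model-a", 0.25),
    UsageEvent("retail", "store-assistant", "model-b", 0.50),
    UsageEvent("network", "outage-triage", "model-a", 1.25),
]
print(cost_by(events, "department"))  # {'retail': 0.75, 'network': 1.25}
print(cost_by(events, "model"))       # {'model-a': 1.5, 'model-b': 0.5}
```

Floats keep the sketch short; real billing code would more likely use `decimal.Decimal` or integer cents to avoid rounding drift.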

Today, 50,000 TELUS employees use Fuel iX to pick the right model for the job without needing IT to rewrite pipelines or renegotiate pricing. So far the company has saved more than 500,000 work hours (more than 62,000 eight-hour working days), and that figure continues to grow.

Results that prove the platform works

Some real examples of how Fuel iX Copilots is saving time and money at TELUS:

An internal project planning copilot:

Cut the time needed to produce timeline estimates from 3 hours to 90 seconds, delivering $2M+ in efficiency gains for TELUS.

A retail-facing AI tool:

Integrates live product information across 300+ stores and saves ~$1.4M a year by cutting interaction time.

A network engineering assistant, built into Google Chat:

Has helped generate 6,000+ ad hoc jobs and driven $7M+ in OPEX savings.

A contact centre copilot:

Has saved 80,000+ training hours and $1M in OPEX, and handles 30,000 customer queries a day.

Bottom-up, not top-down

One of the smartest moves TELUS made was letting people play in a safe playground.

Early on, they launched a sandbox environment with open access to generative AI tools for 30,000 employees. Teams could experiment, test ideas, and build their own copilots without needing to be technical.

That turned the usual enterprise AI script on its head. Instead of pushing rigid tools onto teams, TELUS watched departments build what they needed from scratch, and only scaled the things that stuck.

Adoption grew organically with encouragement from leadership. Resistance gave way to curiosity. And that curiosity turned into capability.

Lessons to take away

If you’re building AI workflows inside a large org, TELUS has some advice:

Instead of getting locked into one model, use a flexible, multi-LLM platform that can adapt to new tools.

Think like a product team – design a system that people will want to use.

Keep interfaces simple. A good copilot builder should be as plug-and-play as a Canva template.

Pair flexibility with strong governance. TELUS’s control plane ensures security and compliance without slowing innovation down.

In a space dominated by proofs of concept, piecemeal deployments, and tools not fit for the enterprise, TELUS built a product that gives business-level decision-makers real control and confidence. Meanwhile, the people doing the work get to do it better and faster.

And now, they’re packaging it up and offering it to other enterprises. If it can work at TELUS scale and in highly regulated environments, it can surely work anywhere.