ChatGPT vs OpenAI API: Differences explained

We break down the similarities and differences between the OpenAI API and the ChatGPT app.

If you’ve ever wondered what the difference is between ChatGPT and the OpenAI API, this blog post will explain.

Maybe you’ve noticed that there’s the ChatGPT app and also lots of other tools and apps that use ChatGPT’s capabilities. Perhaps you’ve built a workflow in Zapier that connects ChatGPT and you’re wondering exactly how that works.

In this post, we’ll explore answers to these questions:

What is OpenAI?

What is ChatGPT the app?

What’s an API?

Is the OpenAI API different from ChatGPT?

Bonus: Using the OpenAI Playground as an alternative to ChatGPT

Let’s dive in.

The early days of OpenAI

ChatGPT launched in November 2022, but OpenAI first drew wide attention with its GPT-3 language model, which it released in mid-2020.

Rather than launching a consumer-facing app, OpenAI first made this AI model available for other apps to integrate via an API.

What’s an API? It stands for ‘application programming interface’, which is essentially a way of connecting apps so they can communicate and send data to each other.

One of the first really popular apps to integrate with the OpenAI API was Jasper. They released a writing tool that helped people write AI blog posts, social posts, emails and website content, via a Google Docs-like user interface.

💡 Jasper is still one of the best tools for a growing marketing team. Check out our tutorial on creating marketing content derived from your company's knowledge base.

Jasper was a hit and spawned several similar apps and tools. However, AI was still a niche field, with most usage coming from marketers and copywriters.

Then came ChatGPT…

The rise of ChatGPT

When it launched in November 2022, ChatGPT was an instant hit, gaining millions of users within months and reaching an audience well beyond marketers.

At first it was free for everyone, but OpenAI eventually introduced a $20/month Plus plan and, more recently, a $200/month Pro plan.

For this free or flat-rate pricing, you get varying degrees of access to their current language models. In rough order of capability, these are:

GPT-4o-mini

GPT-4o

o1-mini

o1

o3

The last three are their new generation of ‘reasoning’ models.

And, through the ChatGPT platform, OpenAI makes available other tools like:

DALL-E - their image generator

Sora - their video generator

Operator - their new AI-powered web task automator

Deep Research - an agentic model that can perform complex web research tasks

💡 If you’re keen to do a deep dive on using ChatGPT, check out our course: Learn how to use ChatGPT.

Using the OpenAI API

Just like at the beginning, if you want to:

Build the power of these OpenAI language models and tools into your own apps, or

Add ChatGPT steps to your Zapier or Make automations, for example

Then you can do that via OpenAI’s API, which is now available to everyone.

To get started, go to https://platform.openai.com/ and sign up or log in.

If you’ve already got a ChatGPT account, you can access the OpenAI API platform with the same login. However, the two work separately: a ChatGPT subscription does not include API usage, which is billed on its own.

The first thing you need to do is get an API key here: https://platform.openai.com/api-keys. You’ll need this when you go to connect your app (or Zapier/Make) to OpenAI.
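To show roughly where the API key ends up, here is a minimal sketch that uses only Python’s standard library. It targets the Chat Completions endpoint and sends the key as a bearer token. The helper names `build_chat_request` and `send_chat_request`, the example prompt and the choice of `gpt-4o-mini` are our own illustrative assumptions, not something this post or OpenAI prescribes.

```python
import json
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, prompt, model="gpt-4o-mini"):
    """Build (but do not send) an HTTP request carrying a single user prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The API key travels in the Authorization header as a bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the text of the first reply (needs network access)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

In practice you would read the key from somewhere private, such as an environment variable, rather than pasting it into your code, and then pass the result of `build_chat_request(key, "Say hello")` to `send_chat_request`. Tools like Zapier and Make do the equivalent of this for you once you paste in your key.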

How pricing works for ChatGPT vs the API

Whereas ChatGPT has flat-rate pricing that gives you limited or unlimited access to its language models, the API is priced on a metered basis. You only pay for what you use.

The API gives you access to the same language models as ChatGPT (and a few more), but understanding how metered pricing works for them is a little more complex.

The main thing to understand is the concept of input and output tokens.

Input tokens are everything you send to the API. This will typically include the system prompt, your user prompt and, if you’re having an ongoing conversation with the API, a history of all your previous messages and the language model’s responses (this gives it overall context for new responses).

Output tokens are everything the language model sends back: the response.
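To make the point about conversation history concrete, here is a small sketch of how the list of messages grows with each turn. The `system`, `user` and `assistant` roles are the ones the Chat Completions API uses; the helper names and the sample conversation are made up for illustration.

```python
def add_turn(history, user_message, assistant_reply):
    """Return a new history with one more user/assistant exchange appended."""
    return history + [
        {"role": "user", "content": user_message},
        {"role": "assistant", "content": assistant_reply},
    ]


def messages_for_next_call(system_prompt, history, new_user_message):
    """Everything in the returned list is sent to the API and counts as input."""
    return (
        [{"role": "system", "content": system_prompt}]
        + history
        + [{"role": "user", "content": new_user_message}]
    )


history = []
history = add_turn(history, "What is an API?", "A way for apps to talk to each other.")
history = add_turn(history, "Give me an example.", "Zapier calling OpenAI.")

messages = messages_for_next_call("You are a helpful assistant.", history, "Thanks!")
print(len(messages))  # 6: one system message, four history messages, one new message
```

Because the whole list is resent on every call, a long conversation uses more input tokens per request than a short one, even if the latest message is only a word or two.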

It’s also important to note that one token does not equal one word. In fact, language models break down words into parts—these are the tokens.

You can learn more about tokens in this article.
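For a feel of the difference between words and tokens, here is a very rough estimator. It leans on the common rule of thumb that one token is about four characters of English text. That is only an approximation: real counts come from the model’s tokenizer, and the function name `rough_token_estimate` is our own.

```python
import math


def rough_token_estimate(text, chars_per_token=4):
    """Very rough token count, assuming about four characters per token."""
    return math.ceil(len(text) / chars_per_token)


sentence = "Tokenization splits words into smaller parts."
print(len(sentence.split()), "words")                  # 6 words
print(rough_token_estimate(sentence), "estimated tokens")  # 12 estimated tokens
```

The estimate is higher than the word count, which is the pattern to expect: budget for more tokens than words.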

To see how much OpenAI charges for each type of token, you can view their pricing. Because the per-token cost is tiny, prices are quoted per million tokens.
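The arithmetic behind per-million-token pricing can be sketched in a few lines. The two prices below are placeholders chosen to keep the sums easy, not real OpenAI rates, so swap in the current figures from the pricing page for the model you use.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_million, output_price_per_million):
    """Return the cost in dollars of one request under per-million-token pricing."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Placeholder prices in dollars per million tokens, NOT real OpenAI rates.
EXAMPLE_INPUT_PRICE = 1.00
EXAMPLE_OUTPUT_PRICE = 4.00

# A request with 2,000 input tokens and 500 output tokens.
cost = estimate_cost(2_000, 500, EXAMPLE_INPUT_PRICE, EXAMPLE_OUTPUT_PRICE)
print(f"${cost:.4f}")  # $0.0040
```

Input and output tokens are priced separately, which is why the function takes two rates.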

Compared to the flat monthly cost of ChatGPT, using OpenAI’s language models via their API can be incredibly cheap, especially for light or occasional use.

💡 If you’re a big user of Slack (who isn’t?) then learn from our tutorial how you can build your own version of ChatGPT in Slack using the OpenAI API.

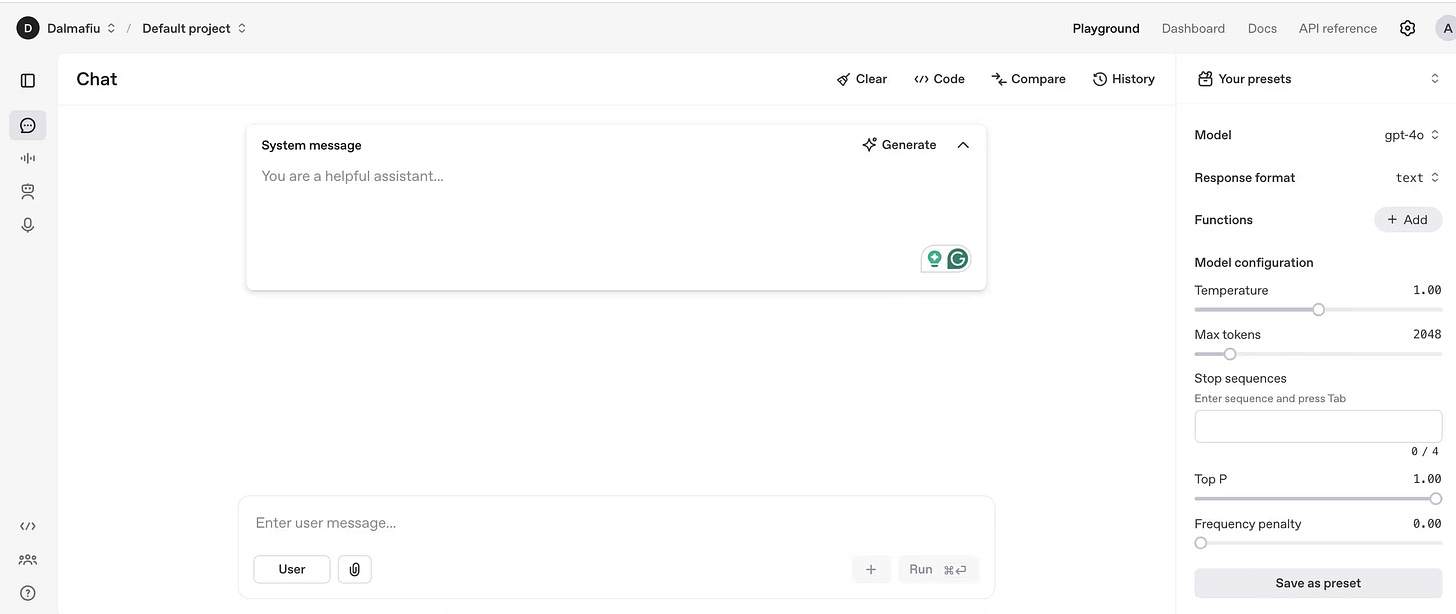

Using the OpenAI Playground as an alternative to ChatGPT

Say you want to test out some prompts before you build something using the OpenAI API. It would make sense to use ChatGPT to do this, but if you want decent access to the more advanced models, you’ll need a paid plan.

A cheaper alternative is to use the Playground, which is a bit like ChatGPT but suited to testing how the API would respond to certain prompts and configurations.

As you can see, it somewhat resembles the ChatGPT interface, but gives you options to set a system message (an overall prompt for the chat) as well as settings like ‘temperature’ (how creative the model’s responses are) and the max output tokens.
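The same settings exist when you call the API yourself. The sketch below builds a Chat Completions request body with a system message, a temperature and an output-token cap. The field names `temperature` and `max_completion_tokens` are API parameters; the helper `build_payload`, its defaults and the example prompts are assumptions made for illustration.

```python
import json


def build_payload(system_message, user_prompt, temperature=1.0,
                  max_output_tokens=256, model="gpt-4o-mini"):
    """Build a request body mirroring the main Playground settings."""
    # The Chat Completions API accepts temperatures from 0 to 2.
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if max_output_tokens < 1:
        raise ValueError("max_output_tokens must be at least 1")
    return {
        "model": model,
        "temperature": temperature,
        "max_completion_tokens": max_output_tokens,
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_prompt},
        ],
    }


payload = build_payload(
    "You write short, friendly replies.",
    "Suggest a blog title about APIs.",
    temperature=0.2,
    max_output_tokens=50,
)
print(json.dumps(payload, indent=2))
```

A lower temperature such as 0.2 tends to give more predictable answers, while the token cap also puts a ceiling on what a single response can cost.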

One of the key things with the Playground is pricing. Instead of paying a flat fee as you do with ChatGPT, you just pay for input and output tokens, the same as with the API. This means that for basic conversations the costs will be minuscule.

This blog post was created by Andrew.